Face Tracking

Reliable tracking of 3D deformable faces is a challenging task in computer vision. First, face shape varies dramatically with identity, pose, and expression. Second, poor lighting can produce low-contrast images or cast shadows on the face, which significantly degrades tracking performance. We develop a non-intrusive system for real-time facial tracking with a single web camera.

Face Tracking with Kinect

We develop a framework that tracks face shapes using both color and depth information. Because faces in different poses lie on a nonlinear manifold, we build piecewise linear face models, each covering a range of poses. A low-resolution depth image captured with Microsoft Kinect is used to predict the head pose and to generate extra constraints at the face boundary. Our experiments show that exploiting the depth information significantly improves tracking performance.

Fig. Overview of our system. (a) A Kinect sensor is used to capture both RGB and depth data. (b) The depth image is used to estimate the face orientation. (c) The face subspace of the closest pose is selected to constrain the face shape. (d) Both RGB and depth information are used to track the face.
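
Steps (c) and the subspace constraint can be illustrated with a minimal sketch. It assumes each pose-specific model is a linear shape model (a mean shape plus an orthonormal basis) and that head pose is summarized by a single yaw angle. The names `PoseModel`, `select_model`, `project_to_subspace`, and the `max_coeff` bound are hypothetical and chosen only for illustration; the actual system may represent pose and constrain shapes differently.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class PoseModel:
    """One piece of a piecewise linear face model (illustrative assumption)."""
    yaw_center: float   # yaw angle in degrees that this model is centered on
    mean: np.ndarray    # mean shape, flattened landmark coordinates, shape (D,)
    basis: np.ndarray   # orthonormal shape basis, shape (D, K)


def select_model(models, yaw):
    """Pick the model whose pose range is closest to the estimated yaw."""
    return min(models, key=lambda m: abs(m.yaw_center - yaw))


def project_to_subspace(model, shape, max_coeff=3.0):
    """Constrain a tracked shape to the selected linear subspace.

    The shape is projected onto the basis, and each coefficient is clipped
    to [-max_coeff, max_coeff] (an assumed plausibility bound).
    """
    coeffs = model.basis.T @ (shape - model.mean)
    coeffs = np.clip(coeffs, -max_coeff, max_coeff)
    return model.mean + model.basis @ coeffs
```

In this sketch the depth-based pose estimate only decides which linear model is active; the tracked landmarks are then regularized by that model alone.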

Facial Expression

Facial expressions play a significant role in daily communication. Recognizing them has extensive applications, such as human-computer interfaces, multimedia, and security. However, identifying the underlying functional facial features, which is the basis of expression recognition, is still an open problem. Studies in psychology show that the facial features of expressions are located around the mouth, nose, and eyes, and that their locations are essential for explaining and categorizing facial expressions. Moreover, expressions are commonly grouped into six "basic expressions": anger, disgust, fear, happiness, sadness, and surprise. Each of these basic expressions can be further decomposed into a set of related action units (AUs).

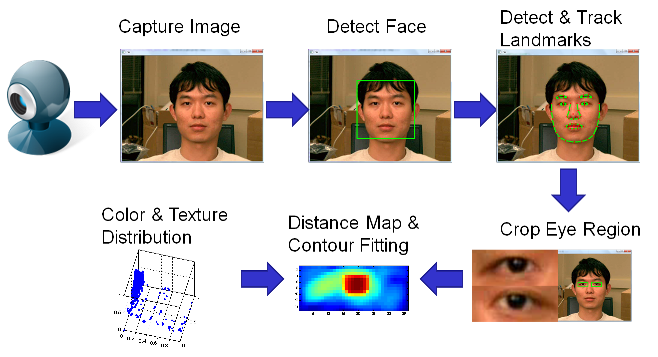

Fatigue Detection

We develop a non-intrusive system for monitoring fatigue by tracking eyelids with a single web camera. Tracking slow eyelid closures is one of the most reliable ways to monitor fatigue during critical performance tasks. The challenges include arbitrary head movement, occlusion, reflections from glasses, and motion blur. We model the shape of each eye with a pair of parameterized parabolic curves, and fit the model in each frame to maximize the total likelihood of the eye regions. Our system tracks face movement and fits eyelids reliably in real time. We test it on videos captured from both alert and drowsy subjects, and the experimental results demonstrate its effectiveness.
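
The eyelid model can be sketched as follows. This is an illustration under stated assumptions, not the system's implementation: the two parabolas are assumed to meet at the eye corners, the eye is assumed to be axis-aligned with a known center and half-width, the likelihood is assumed to be a per-pixel Bernoulli model over a given eye-probability map, and the fit is a plain grid search. The function names and parameters (`eye_mask`, `log_likelihood`, `fit_eyelids`, `heights`) are hypothetical.

```python
import numpy as np


def eye_mask(height, width, cx, cy, half_width, h_upper, h_lower):
    """Boolean mask of the pixels enclosed by two parabolic eyelids.

    Both parabolas pass through the eye corners at (cx - half_width, cy)
    and (cx + half_width, cy); h_upper and h_lower are their peak offsets.
    """
    ys, xs = np.mgrid[0:height, 0:width]
    t = (xs - cx) / half_width
    arch = np.clip(1.0 - t**2, 0.0, None)   # 1 at the eye center, 0 at the corners
    upper = cy - h_upper * arch             # image rows grow downward
    lower = cy + h_lower * arch
    return (np.abs(t) <= 1.0) & (ys >= upper) & (ys <= lower)


def log_likelihood(prob_eye, mask, eps=1e-6):
    """Total log-likelihood of a mask given per-pixel eye probabilities."""
    p = np.clip(prob_eye, eps, 1.0 - eps)
    return np.log(p[mask]).sum() + np.log(1.0 - p[~mask]).sum()


def fit_eyelids(prob_eye, cx, cy, half_width, heights):
    """Grid search for the (h_upper, h_lower) pair with the highest likelihood."""
    candidates = ((hu, hl) for hu in heights for hl in heights)
    return max(
        candidates,
        key=lambda h: log_likelihood(
            prob_eye, eye_mask(*prob_eye.shape, cx, cy, half_width, *h)
        ),
    )
```

Under these assumptions, the fitted opening `h_upper + h_lower` gives one number per frame, and a sustained drop in that value over time would correspond to the slow eyelid closures mentioned above.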

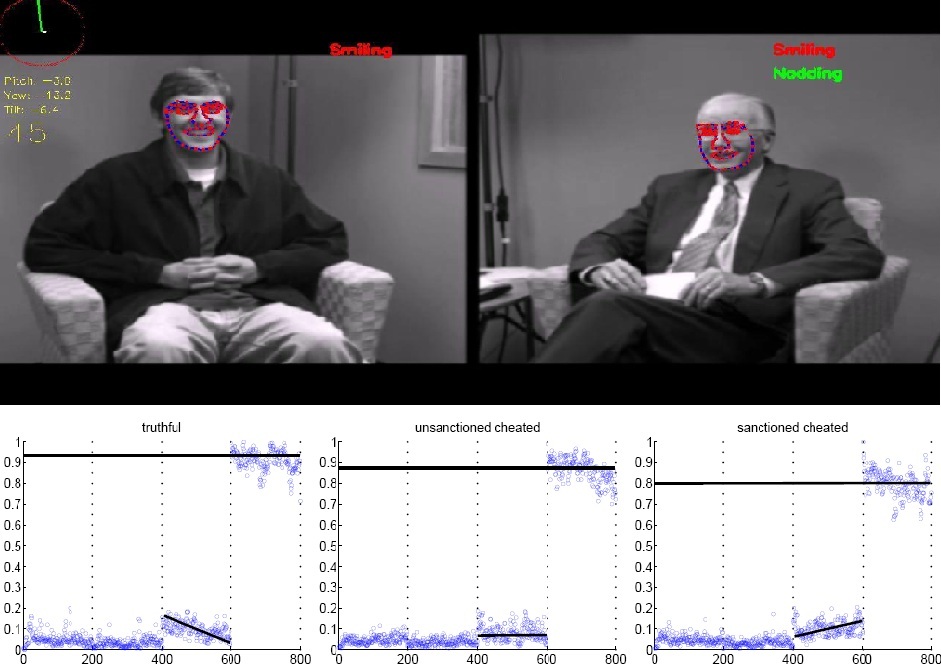

Dyadic Synchrony as a Measure of Trust and Veracity

We investigate how the degree of interactional synchrony can signal whether trust is present, absent, increasing, or declining. We propose an automated, data-driven, and unobtrusive framework for deception detection and analysis in interrogation interviews from visual cues only. The framework consists of face tracking, gesture detection, expression recognition, and synchrony estimation. It automatically tracks the gestures and expressions of both the subject and the interviewer, extracts normalized synchrony features, and learns classification models for deception recognition. To validate the proposed synchrony features, we conducted extensive experiments on a database of 242 video samples; the results show that these features are effective at detecting deception.
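
One simple way to quantify synchrony between two people is sketched below. It assumes each person is described by a per-frame scalar signal (for example, an expression intensity) and measures synchrony as the peak lagged Pearson correlation between the two signals. This particular measure, and the names `lagged_correlation` and `synchrony_score`, are assumptions made for illustration; the text above does not specify which synchrony features the framework actually uses.

```python
import numpy as np


def lagged_correlation(a, b, lag):
    """Pearson correlation between a[t] and b[t + lag]."""
    if lag > 0:
        a, b = a[:-lag], b[lag:]
    elif lag < 0:
        a, b = a[-lag:], b[:lag]
    if a.std() == 0 or b.std() == 0:
        return 0.0  # correlation is undefined for a constant signal
    return float(np.corrcoef(a, b)[0, 1])


def synchrony_score(a, b, max_lag):
    """Return (correlation, lag) at the lag with the largest absolute correlation."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    lags = range(-max_lag, max_lag + 1)
    scores = [lagged_correlation(a, b, k) for k in lags]
    best = int(np.argmax(np.abs(scores)))
    return scores[best], lags[best]
```

Because Pearson correlation is invariant to the scale and offset of each signal, the score is normalized across subjects; the returned lag indicates which signal leads the other.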

Publications

- Xiang Yu, Junzhou Huang, Shaoting Zhang, Dimitris Metaxas, "Face Landmark Fitting via Optimized Part Mixtures and Cascaded Deformable Model", IEEE Transactions on Pattern Analysis and Machine Intelligence, Volume 38, Issue 11, pp. 2212-2226, November 2016.

- Xi Peng, Qiong Hu, Junzhou Huang, Dimitris Metaxas, "Track Facial Points in Unconstrained Videos", The 27th British Machine Vision Conference, BMVC'16, York, UK, September 2016.

- Xi Peng, Junzhou Huang, Dimitris Metaxas, "Sequential Face Alignment via Person-Specific Modeling in the Wild", In Proc. of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, CVPRW'16, Las Vegas, USA, June 2016.

- Lin Zhong, Qingshan Liu, Peng Yang, Junzhou Huang and Dimitris Metaxas, "Learning Multi-scale Active Facial Patches for Expression Analysis", IEEE Transactions on Cybernetics, Volume 45, Number 8, pp. 1499-1510, August 2015.

- Xi Peng, Junzhou Huang, Qiong Hu, Shaoting Zhang, Ahmed Elgammal and Dimitris Metaxas, "From Circle to 3-Sphere: Head Pose Estimation by Instance Parameterization", Computer Vision and Image Understanding, Volume 136, pp. 92-102, July 2015.

- Xiang Yu, Shaoting Zhang, Zhennan Yan, Fei Yang, Junzhou Huang, Norah Dunbar, Matthew Jensen, Judee K. Burgoon and Dimitris N. Metaxas, "Is Interactional Dissynchrony a Clue to Deception? Insights from Automated Analysis of Nonverbal Visual Cues", IEEE Transactions on Cybernetics, Volume 45, Issue 3, pp. 506-520, March 2015.

- Xi Peng, Junzhou Huang, Qiong Hu, Shaoting Zhang, Dimitris Metaxas, "Three-Dimensional Head Pose Estimation in-the-Wild", In Proc. of the 11th IEEE International Conference on Automatic Face and Gesture Recognition, FG'15, Ljubljana, Slovenia, May 2015.

- Xi Peng, Junzhou Huang, Qiong Hu, Shaoting Zhang and Dimitris N. Metaxas, "Head Pose Estimation by Instance Parameterization", In Proc. of International Conference on Pattern Recognition, ICPR'14, Stockholm, Sweden, August 2014.

- Baiyang Liu, Junzhou Huang, Casimir Kulikowski, Lin Yang, "Robust Visual Tracking Using Local Sparse Appearance Model and K-Selection", IEEE Transactions on Pattern Analysis and Machine Intelligence, Volume 35, Issue 12, pp. 2968-2981, December 2013.

- Xiang Yu, Junzhou Huang, Shaoting Zhang, Wang Yan and Dimitris Metaxas, "Pose-free Facial Landmark Fitting via Optimized Part Mixtures and Cascaded Deformable Shape Model", In Proc. of the 14th International Conference on Computer Vision, ICCV'13, Sydney, Australia, December 2013.

- Xiang Yu, Shaoting Zhang, Zhennan Yan, Fei Yang, Junzhou Huang, Norah Dunbar, Matthew Jensen, Judee Burgoon and Dimitris N. Metaxas, "Is Interactional Dissynchrony a Clue to Deception: Insights from Automated Analysis of Nonverbal Visual Cues", 46th Hawaii International Conference on System Sciences, HICSS'13, Wailea, Hawaii, USA, January 2013.

- Xiang Yu, Fei Yang, Junzhou Huang and Dimitris Metaxas, "Explicit Occlusion Detection based Deformable Fitting for Facial Landmark Localization", IEEE Conference on Automatic Face and Gesture Recognition, FG'13, Shanghai, China, April 2013.

- Fei Yang, Junzhou Huang, Xiang Yu, Dimitris Metaxas, "Robust Face Tracking with a Consumer Depth Camera", IEEE International Conference on Image Processing, ICIP'12, Orlando, Florida, USA, September 2012.

- Fei Yang, Xiang Yu, Junzhou Huang, Peng Yang, Dimitris Metaxas, "Robust Eyelid Tracking for Fatigue Detection", IEEE International Conference on Image Processing, ICIP'12, Orlando, Florida, USA, September 2012.

- Lin Zhong, Qingshan Liu, Peng Yang, Bo Liu, Junzhou Huang and Dimitris Metaxas, "Learning Active Facial Patches for Expression Analysis", In Proc. of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR'12, Providence, Rhode Island, USA, June 2012.

- Fei Yang, Junzhou Huang, Dimitris Metaxas, "Sparse Shape Registration for Occluded Facial Feature Localization", In Proc. of the 9th Conference on Automatic Face and Gesture Recognition, FG'11, Santa Barbara, California, USA, March 2011.

- Fei Yang, Junzhou Huang, Peng Yang, Dimitris Metaxas, "Eye Localization through Multiscale Sparse Dictionaries", In Proc. of the 9th Conference on Automatic Face and Gesture Recognition, FG'11, Santa Barbara, California, USA, March 2011.